Deep neural networks often suffer from severe performance degradation when tested on images that differ visually from those encountered during training. This degradation is caused by factors such as domain shift, noise, or changes in lighting.

Recent research has focused on domain adaptation techniques to build deep models that can adapt from an annotated source dataset to a target dataset. However, such methods usually require access to downstream training data, which can be challenging to collect.

An alternative approach is Test-Time Adaptation (TTA), which aims to improve the robustness of a pre-trained neural network to a test dataset, potentially by enhancing the network’s predictions on one test sample at a time. Two notable TTA methods for image classification are:

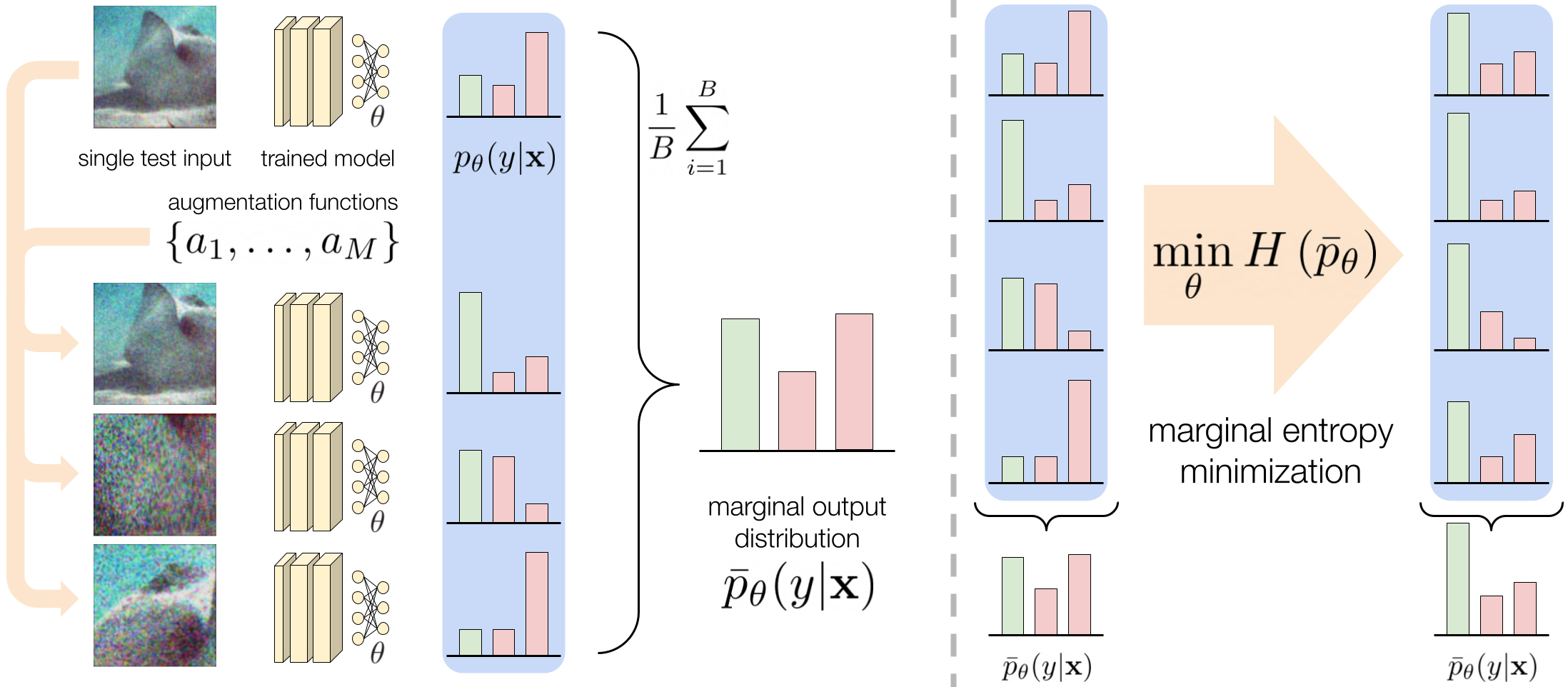

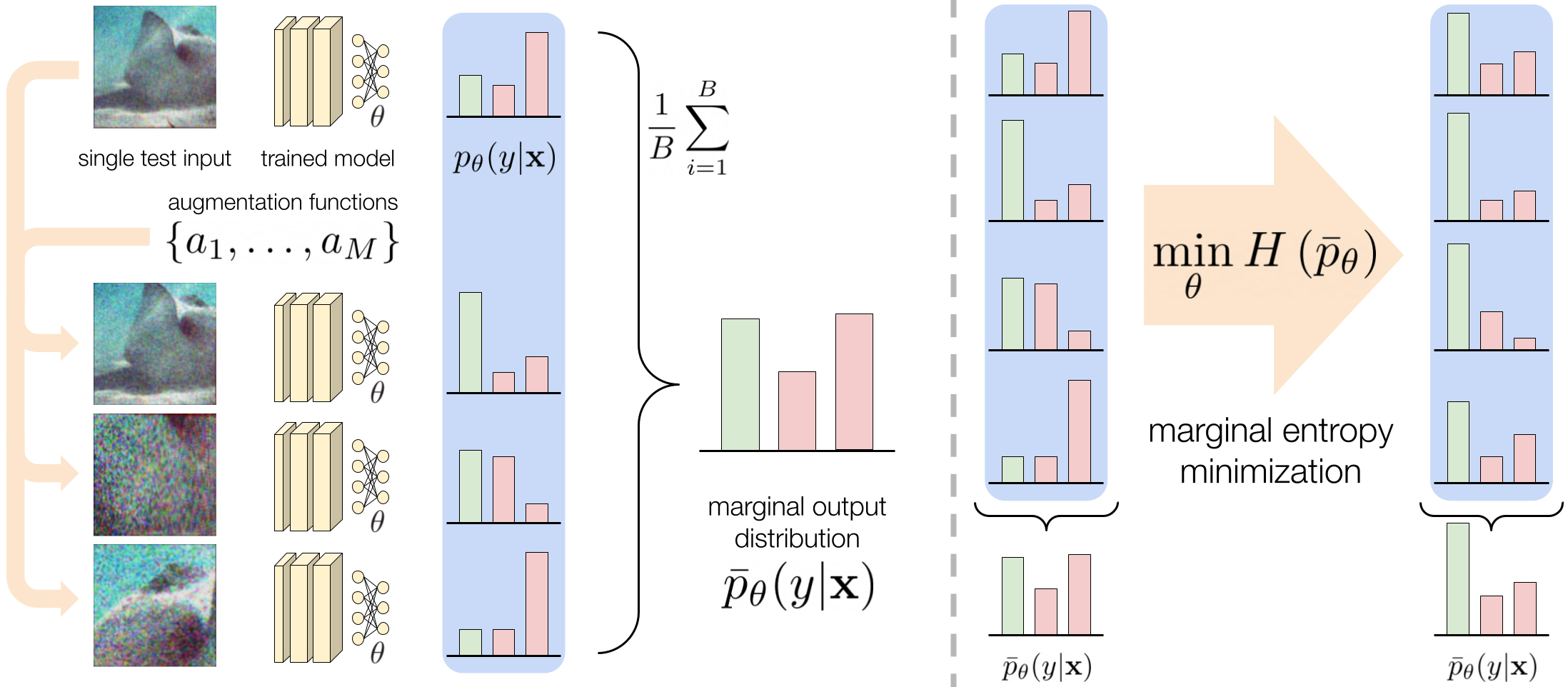

- Marginal Entropy Minimization with One test point (MEMO): This method uses pre-trained models directly without making any assumptions about their specific training procedures or architectures, requiring only a single test input for adaptation.

- Test-Time Prompt Tuning (TPT): This method adapts the text prompt of a pre-trained vision-language model such as CLIP on the fly, using only a single unlabeled test sample and no additional training data.
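As a rough illustration of the objective that MEMO's name refers to, the sketch below computes the entropy of the prediction averaged over several augmented views of one input. It is not the project's implementation: the helper names `softmax` and `marginal_entropy` and the toy logits are assumptions made for illustration, and the gradient step that MEMO takes to reduce this quantity is left out.

```python
import math


def softmax(logits):
    """Turn a list of raw scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def marginal_entropy(logits_per_view):
    """Average the per-view class probabilities, then take the entropy.

    logits_per_view: one list of class logits per augmented view of the
    same test input (hypothetical values in this sketch).
    """
    probs = [softmax(view) for view in logits_per_view]
    n_views = len(probs)
    n_classes = len(probs[0])
    # Marginal distribution: mean probability of each class across views.
    marginal = [sum(p[c] for p in probs) / n_views for c in range(n_classes)]
    # Shannon entropy of the marginal (natural log).
    entropy = -sum(p * math.log(p) for p in marginal if p > 0.0)
    return marginal, entropy


# Toy logits for three augmented views of one image, three classes.
agreeing = [[4.0, 0.5, 0.1], [3.5, 0.2, 0.3], [4.2, 0.1, 0.4]]
conflicting = [[4.0, 0.5, 0.1], [0.2, 3.5, 0.3], [0.1, 0.4, 4.2]]

_, h_agree = marginal_entropy(agreeing)
_, h_conflict = marginal_entropy(conflicting)
print(f"entropy when views agree:    {h_agree:.3f}")
print(f"entropy when views conflict: {h_conflict:.3f}")
```

The entropy is low when the augmented views agree on a confident prediction and high when they disagree, which is why lowering it pushes the model toward predictions that are both confident and consistent across augmentations.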

For this project, MEMO was applied to a pretrained Vision Transformer, ViT-B/16, using the ImageNetV2 dataset. This network operates as follows: given a test point

Let $ A = \{a_1, \dots, a_M\} $ be a set of augmentations (resizing, cropping, color jittering, etc.). Each augmentation $ a_i \in A $ can be applied to an input sample

MEMO starts by applying a set of

Since the true label

- Clone the repository:

      git clone https://github.com/christiansassi/deep-learning-project
      cd deep-learning-project

- Upload the notebook `deep_learning.ipynb` to Google Colab. NOTE: Make sure you use the T4 GPU.

Matteo Beltrami – matteo.beltrami-1@studenti.unitn.it

Pietro Bologna – pietro.bologna@studenti.unitn.it

Christian Sassi – christian.sassi@studenti.unitn.it

https://github.com/christiansassi/deep-learning-project

